Use-of-force incidents are reviewed under intense scrutiny. Traditional review relies on reports, video playback, and testimony, often after outcomes are already known. This can unintentionally introduce hindsight bias, leading reviewers to judge decisions by outcomes that were never known to officers in real time.

Why Outcome-Based Review Can Be Misleading

In real time, officers make decisions with limited information, extreme time pressure, and rapidly changing conditions. Reviewing decisions solely through the lens of the final outcome can distort reviewers' understanding of what was reasonably perceived at the moment force was used.

AI-Generated Alternate Outcomes

Force Outcomes uses AI to generate controlled, advisory “what‑if” video scenarios from incident footage, including body‑worn camera, dashcam, CCTV, and cell phone video.

These scenarios illustrate plausible alternatives and help reviewers visualize the risks that defensive actions were intended to prevent. They are not presented as factual reconstructions.

Instead, these outputs are advisory training and review materials designed to help evaluators better understand context, uncertainty, and decision‑making under stress.

Where This Helps

- Training and scenario‑based instruction

- Administrative and policy compliance review

- Arbitration and disciplinary proceedings

- Post-incident and courtroom advisory preparation

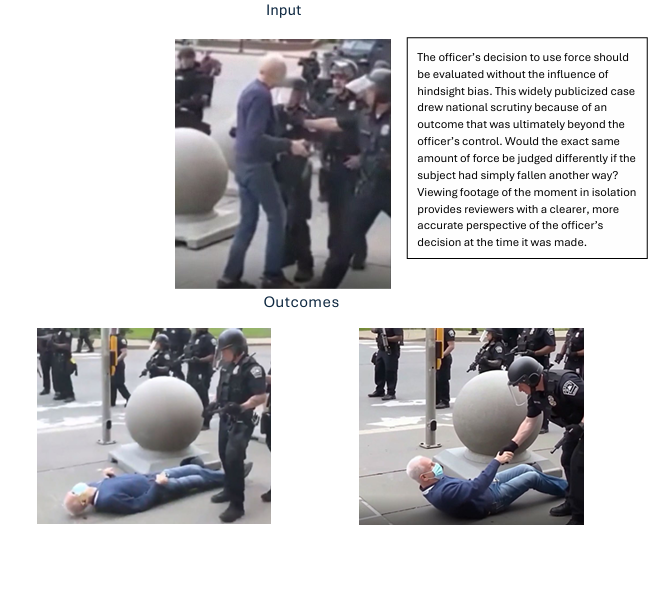

Sample Application: Visualizing Alternate Outcomes

The following example illustrates how AI-generated alternate outcomes can help reviewers look beyond a single result and better understand how decisions are made in real time.

By keeping the original incident footage separate from the advisory outcomes, reviewers can more clearly evaluate what an officer reasonably perceived at the moment force was used, without the distorting influence of hindsight.

As of 2026, we have begun seeing AI-generated videos related to use-of-force incidents circulating publicly, including videos associated with the Renée Nicole Good ICE shooting. While such videos may draw attention, AI outputs generated without expert oversight often lack credibility and can mislead reviewers.

Force Outcomes exists to address this gap. Our platform ensures AI‑generated scenarios are created and reviewed by experienced use‑of‑force professionals, making them suitable for training, arbitration, and administrative review in a cost‑efficient, defensible manner.

The Bottom Line

AI doesn’t replace judgment; it improves understanding. By visualizing alternate outcomes, agencies can reduce outcome bias, improve training quality, and support fairer, more contextual review processes.

Want to see a sample?

Return to the homepage and request a sample of our work.